At this point, everyone seems to have heard of ChatGPT 3, the breakthrough artificial intelligence engine released in November 2022. A product of OpenAI, this online tool can generate long-form prose from simple prompts, with results often indistinguishable from human efforts. A New York Times column reported that ChatGPT “creates new content, tailored to your request, often with a startling degree of nuance, humor and creativity.”[1] The bot thus commonly passes the Turing Test: it can convince users that it is human.

Many observers greeted ChatGPT with something close to terror. Our dystopian future, they declared, will be the inverse of that foretold by The Jetsons. Instead of cheerful robot servants freeing us from manual drudgery, robots will replace us as writers, artists, and thinkers. Domestic labor and farm work will become the last arenas where humans surpass our robot creations. Our niche will shrink to that of Roomba assistants, tidying up the spots missed by robot vacuums. While some of these commentaries were arch, many were earnest. Writing in the MIT Technology Review, Melissa Heikkilä explicitly posed the question “Could ChatGPT do my job?”[2] In the New York Times, Frank Bruni wondered “Will ChatGPT Make Me Irrelevant?”[3]

Many academics echoed these alarms. “You can no longer give take-home exams,” wrote Kevin Bryan, a professor of management based at the University of Toronto. Samuel Bagg, a University of South Carolina political scientist, suggested that ChatGPT3 “may actually spell the end of writing assignments.”

I dissent.

At its core, ChatGPT is just a predictive text algorithm. Simple predictive text engines are ubiquitous. In the common email client Microsoft Outlook, for example, if I write several lines of text (an indication that it might be time for a conclusion), and then type “please get”, the software immediately suggests “back to me.” If I accept that suggestion, it offers “as soon as possible.” This is not scary or surprising. The algorithm is simply relying on predictable prose patterns. The best predictive text algorithms are adaptive. You have likely experienced how your phone’s texting feature improves with time. That is simply a product of the software incorporating the probabilities of your writing habits. If I routinely write, “Please get me a taco and change the oil on my car,” a good algorithm will accordingly change the autocomplete suggestions for “Please get.”
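The adaptive behavior described above can be sketched in a few lines of Python. This is a toy illustration only, assuming a simple word-count approach; it is not how Outlook, a phone keyboard, or ChatGPT is actually implemented, and the class name `Autocomplete` and its methods are hypothetical names chosen for this example.

```python
from collections import Counter, defaultdict


class Autocomplete:
    """Toy adaptive autocomplete (hypothetical, for illustration only).

    It counts which word has followed each two-word context in the text
    it has seen, and suggests the most frequent follower.
    """

    def __init__(self, context_size=2):
        self.context_size = context_size
        # Maps a tuple of context words to a Counter of following words.
        self.counts = defaultdict(Counter)

    def learn(self, sentence):
        """Update the follower counts from one sentence of the user's writing."""
        words = sentence.lower().split()
        n = self.context_size
        for i in range(len(words) - n):
            context = tuple(words[i:i + n])
            self.counts[context][words[i + n]] += 1

    def suggest(self, prompt):
        """Return the most frequent next word, or None for an unseen context."""
        words = prompt.lower().split()
        context = tuple(words[-self.context_size:])
        followers = self.counts.get(context)
        if not followers:
            return None
        return followers.most_common(1)[0][0]


model = Autocomplete()
model.learn("please get back to me as soon as possible")
print(model.suggest("please get"))  # back

# The writer's habits change, and the suggestion follows the new counts.
for _ in range(2):
    model.learn("please get me a taco and change the oil on my car")
print(model.suggest("please get"))  # me
```

The suggestion changes from "back" to "me" only because the counts change. Nothing in the program represents what a taco or an oil change is.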

ChatGPT3 is nothing more than those familiar text algorithms, but at a massive scale. It seems different because size matters. The textbase is roughly 300 billion words, and the computational costs to train the engine (essentially the electric bill) ran into millions of dollars. Unlike familiar predictive text methods, which look at a few words (e.g., “please get” prompts “back to me”), ChatGPT3 accepts prompts and returns responses that run to thousands of words.

That difference in scale obscures key similarities. After all, although both are primates, a 400 lb. mountain gorilla is threatening in ways that a 5 lb. ring-tailed lemur is not. But the fact that ChatGPT3 is just a predictive text algorithm is essential to appreciating its limits. Gorillas, however large and scary, are primates and share the core limits of that order: they cannot fly, live underwater, or turn sunlight into starch. In the same way, ChatGPT does not “think.”

No predictive text algorithm, no matter its scale, can write anything new. The scope of ChatGPT3 conceals that it is just a massive “cut and paste” engine, auto-completing our text prompts based on billions of pages scraped from the internet. That is both its great power and its core limitation. ChatGPT3 is devastatingly “human” at the most mundane “cut and paste” aspects of writing. It will likely automate many forms of “compliance writing” (certifications that a person or organization conforms to rules and regulations), as well as customer service letters, legal forms, insurance reports, etc. Of course, many of those tasks were already partly automated, but ChatGPT3 has radically streamlined the interface. By extension, ChatGPT can resemble a human student and earn a solid B+ when responding to any question that has an established answer. If there are thousands of examples on the internet, ChatGPT will convincingly reassemble those into seemingly human prose.

ChatGPT thus poses a real but energizing question for teachers. If ChatGPT is most human-like when answering “cut and paste” questions, why are we posing such questions? Adapting to ChatGPT requires not a ban on the software, much less a retreat into an imaginary past before computers, but merely some healthy self-reflection. If we are genuinely teaching our students to think and write critically, then we have nothing to fear from ChatGPT. If our test questions can be answered by ChatGPT, then we aren’t requiring critical reading or thinking.

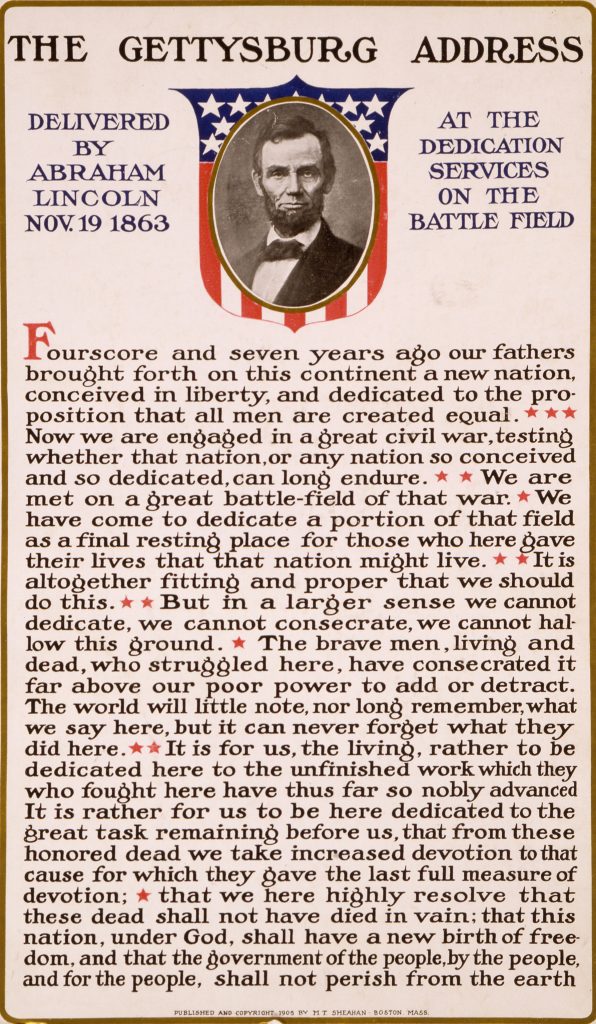

We can break ChatGPT simply by demanding that students directly engage historical sources. Consider the prompt, “Relate the Gettysburg Address to the Declaration of Independence. Is Lincoln expanding on an older vision of the Republic or creating a new one?” ChatGPT generates a compelling simulacrum of a cautious B-student: “the Gettysburg Address can be seen as both an expansion of the principles outlined in the Declaration of Independence and the creation of a new vision for the nation.” But the essay never quotes either document, and ChatGPT gets confused as soon as we push for specifics. Thus, the prompt “When Lincoln declared that ‘all men are created equal’ was he creating a new vision of liberty?” generates the response “Abraham Lincoln did not declare that ‘all men are created equal’; rather, this phrase comes from the United States Declaration of Independence, which was written by Thomas Jefferson in 1776.” This answer is incoherent because ChatGPT3 does not “understand” the meaning of “declare.” It was likely tripped up by a probabilistic association of “declare” with “declaration.”

ChatGPT3 collapses completely when we move beyond canonical sources and press further on specifics. Consider the prompt “Washington’s famous ‘Letter to a Hebrew Congregation in Newport’ is a response to an invitation from that congregation. Using your close reading skills, which aspects of that invitation does Washington engage and which does he ignore?” Here, ChatGPT becomes an expert fabulist. There is no massive internet corpus on the original invitation, so it infers grievances: “For example, the Jewish community in Newport had expressed concerns about their status as a minority group, as well as their economic and social opportunities.” In other iterations, it asserts that Washington ignored a plea to “help secure the rights of all citizens, including those who are marginalized or oppressed.” Those answers have little to do with the primary sources, although they are compelling imitations of a poorly prepared student.

ChatGPT misses core elements of the exchange. For example, the invitation calls for the divine protection of Washington and for his ascent to heaven, but Washington responds modestly and with a broadly ecumenical vision of the afterlife. Even when I pasted the original Newport letter to Washington into ChatGPT, it responded with a boilerplate summary of Washington’s response. It can only write what’s already been written.

I have focused here on American history because in my specialty of Japanese history, where there are comparatively few English-language examples to repurpose, ChatGPT breaks down both rapidly and thoroughly. I asked it about Edogawa Ranpō’s 1925 short story “The Human Chair.” It is a haunting, gothic work about an obsessed, self-loathing craftsman who builds a massive chair for a luxury hotel, conceals himself in it, and then thrills as he becomes living furniture for a cosmopolitan elite. ChatGPT insisted that it was about a man who tried to turn his wife into a chair. ChatGPT didn’t do the assigned reading because it can’t read. That insight applies across fields and disciplines: the algorithm can only write modified versions of what’s already on the web.

Perhaps, in some distant future, ChatGPT 500 (the descendant of ChatGPT 3) will have absorbed everything that has been written or said. Until then, we need merely inflect our questions to move beyond predictable answers. Open-ended questions about Rousseau’s Social Contract or Kant’s What is Enlightenment? need to slip into oblivion. But relating any of those canonical texts to non-canonical sources, and insisting on quotes, is a vibrant alternative. How, for example, does this Boston newspaper editorial on the Haitian Revolution relate to Rousseau? Or “Here’s a neglected passage of Spinoza. Relate it to this well-known passage from Kant.” Such questions stymie ChatGPT3. They can also give our students a better education, one that is truer to the central objective of the humanities: teaching students to think for themselves. And they will make teaching more rewarding. At first glance, ChatGPT3 is genuinely scary. But even the scariest gorillas cannot fly or turn sunlight into starch. And, “please get back to me as soon as possible.”

Mark Ravina is the Mitsubishi Heavy Industries Chair in Japanese Studies at the University of Texas at Austin.

[1] https://www.nytimes.com/interactive/2022/12/26/upshot/chatgpt-child-essays.html?searchResultPosition=1

[2] https://www.technologyreview.com/2023/01/31/1067436/could-chatgpt-do-my-job/

[3] https://www.nytimes.com/2022/12/15/opinion/chatgpt-artificial-intelligence.html?searchResultPosition=5

The views and opinions expressed in this article or video are those of the individual author(s) or presenter(s) and do not necessarily reflect the policy or views of the editors at Not Even Past, the UT Department of History, the University of Texas at Austin, or the UT System Board of Regents. Not Even Past is an online public history magazine rather than a peer-reviewed academic journal. While we make efforts to ensure that factual information in articles was obtained from reliable sources, Not Even Past is not responsible for any errors or omissions.