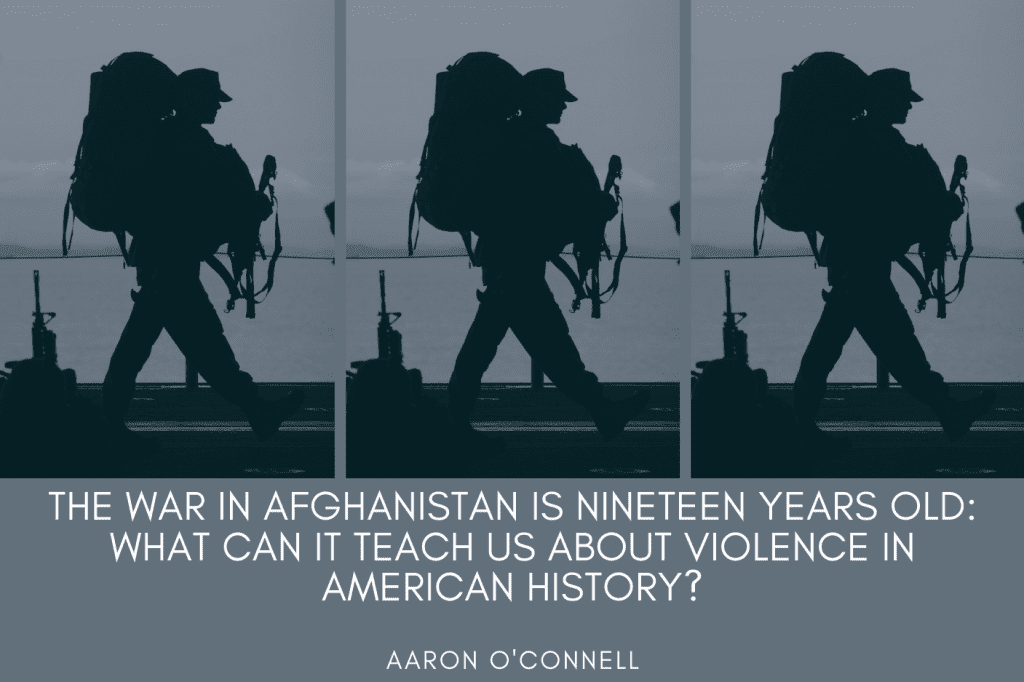

History professors often look for ways to use the past to inform present debates. With long-past events, that sometimes requires some acrobatic leaps over centuries or millennia, but in my own courses on violence in American history, the connections are often pretty obvious. Every day, a stream of new or ongoing violent events invites historical consideration: years-long wars in Iraq, Afghanistan, and Syria; regular drone strikes in Yemen, Somalia, and Libya; armed militia groups parading in America’s streets; mass shootings and regular evidence of police misconduct; and violent demonstrations in America’s cities, including right here in Austin. I often ask my students: Do any of these events have historical precedents worth knowing about? Are there any long-standing structural forces at work that help explain how violence has operated in American society?

For those of us old enough to remember the Cold War – or the Rodney King riots, the Oklahoma City bombing, or the surge of militia movements in the 1990s – the answer to both of these questions is a pretty emphatic “yes.” But the first-year students in my War and Violence in American History course often know little about any of these events beyond the fact that some of them happened. (Don’t judge – how thoughtful about US history were you when you were 18?) Of course, for historians, knowing an event occurred is just the starting point. We strive to understand the surrounding historical context and we probe for causes, both particular and structural: What were the drivers of these events? Why did they happen when they did? Are there previous events that seem to have ignited from a similar spark?

When I ask these questions in my classes, the conversation moves quickly away from the present and back into the past: “Why are people protesting against police misconduct in Austin?” turns into “Has this happened before? How many protests have been sparked by police misconduct in previous eras?” Discussions of terrorism move from “Why would terrorists attack civilians?” to “How should we think about the Sons of Liberty or the Sullivan Expedition in the Revolutionary War?” In all of these discussions, the educational value comes not just from knowing what happened in the past, but from understanding how those events shaped later laws, policies, culture, decisions over resources, and the ideological frames that we use to make sense of the present.

To take my students through the long history of violence in America, I use a book that has been in the news lately: Howard Zinn’s A People’s History of the United States. This book is well-known – even controversial, both inside academia and out – partly because Zinn tackles some of the sacred cows of America’s national mythos: Is Christopher Columbus better remembered as a genius sailor or a genocidaire? What does it say about the progression of liberty that single women in New Jersey had the vote until 1807, when it was stripped from them by an all-male state legislature? Most important: why have so many history books focused almost exclusively on the stories of white, wealthy men, whose numbers have never approached half – or even a quarter – of the country’s total population?

Zinn’s answer to all of these questions is that there have always been long-standing structural inequalities in American society that have shaped everything from the writing of laws, to the ways they are interpreted, to the stories we tell today about the nation’s past. It is nice to think of America as one big family, Zinn explains, but telling the story that way conceals fierce conflicts in that family’s history “between conquerors and conquered, masters and slaves, capitalists and workers, dominators and dominated in race and sex. And in such a world of conflict, a world of victims and executioners, it is the job of thinking people, as Albert Camus suggested, not to be on the side of the executioners.”[1]

Is telling America’s story primarily from the perspective of victims “biased” history? Of course it is – and you’ll never find a history book that isn’t. Writing history means making an argument, which necessarily means making choices about which stories to tell and whose voices to include. No historian can include every relevant event; if they tried, every history book would be an even more boring version of James Joyce’s Ulysses, with thousands of pages devoted to explaining a single day or decision. The key to good history is to make those choices judiciously, acknowledge conflicting evidence, and support one’s analytical claims with relevant historical facts and quotes from the people who were there at the time. (It helps to tell an entertaining story too, and that’s usually harder when telling people about things they’d rather not confront.)

Zinn not only understands that writing history involves picking and choosing – he explains it to his readers in his very first chapter. “In the inevitable taking of sides which comes from selection and emphasis in history, I prefer to try to tell the story of the discovery of America from the viewpoint of the Arawaks, of the Constitution from the standpoint of the slaves, of Andrew Jackson as seen by the Cherokees, of the Civil War as seen by the New York Irish, of the Mexican war as seen by the deserting soldiers of Scott’s Army, of the rise of industrialism as seen by the young women in the Lowell textile mills,” and so on.[2] He focuses on these perspectives precisely because he did not learn about them in his own undergraduate education in the 1950s, and because he thinks they are too important to ignore. That’s a bias, to be sure, but is it any more biased than the many histories that ignored such perspectives? By what measure?

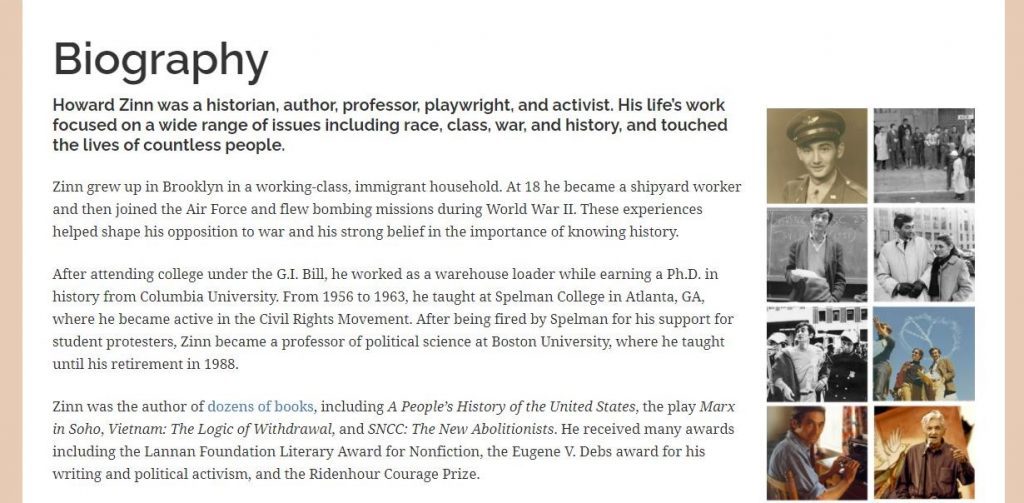

Beyond the question of bias, I often ask whether focusing on the stories of the victims of violence and oppression is “good history.” My students debate this question every week. And, to be clear, they don’t only read Howard Zinn. They also read critiques of Zinn, methodological pieces, and primary sources that either reinforce or complicate the arguments Zinn makes. I also invite my students to question my motives. After all, I assigned the readings – do they think I am pushing my ideas on them for some ulterior purpose? That conversation sometimes gets pretty animated when they learn I spent a quarter of a century in the Marine Corps and that Howard Zinn was himself a World War II combat veteran. I usually respond with questions of my own: Are veterans more likely or less likely to criticize the U.S. government and its foreign policies? How could you research that question? Do veterans have more authority to speak on these issues than professional historians who haven’t served in the Armed Forces? Why or why not? Which credentials do you think matter most for explaining the past or commenting on issues in the present?

Not everyone likes Zinn’s book. I have to admit that it is a sometimes depressing read. But my students are usually united in the belief that it is important to know about the ugliest parts of America’s past. We don’t focus on such moments to “make students ashamed of their own history,” or to “destroy our country,” as President Trump claimed at a White House Conference on American History last month. Rather, my goal – and I think, Howard Zinn’s as well – is to invite students to confront the contradictions between rhetoric and reality in the American experience – contradictions that were present at the creation of the United States and which continue, even now, to undermine the government’s fundamental purpose to “establish justice, insure domestic tranquility, provide for the common defense, promote the general welfare, and secure the blessings of liberty to ourselves and our posterity.”

Every semester I ask my students if the laudable goals set down in the Constitution’s preamble have finally been fully achieved. No one thinks they have. If you do, how do you explain the rather startling absence of domestic tranquility or a shared sense of justice across the country today? And that’s why I’ll continue to teach and talk about A People’s History of the United States in the future. Doing so certainly won’t destroy our country. Quite the opposite: I believe such conversations are a necessary and urgent component of its healing.

Aaron O’Connell is Associate Professor of History at the University of Texas at Austin. Follow him on Twitter @OConnellAaronB.

[1] Howard Zinn, A People’s History of the United States (Harper Perennial Modern Classics, 2003), 10.

[2] Ibid., 10.